- Prerequisites

- Pull ThingsBoard PE images from docker hub

- Step 1. Clone ThingsBoard PE K8S scripts repository

- Step 2. Define environment variables

- Step 3. Configure and create GKE cluster

- Step 4. Update the context of kubectl

- Step 5. Provision Databases

- Step 6. Configure license key

- Step 7. Installation

- Step 8. Starting

- Step 9. Configure Load Balancers

- Step 10. Configure Trendz (Optional)

- Step 11. Using

- Upgrading

- Next steps

This guide will help you set up ThingsBoard PE in microservices mode on Google Kubernetes Engine (GKE).

Prerequisites

Install and configure tools

To deploy ThingsBoard on a GKE cluster, you’ll need to install the kubectl and gcloud tools.

See the Before you begin guide for more info.

Create a new Google Cloud Platform project (recommended) or choose an existing one.

Make sure you have selected the correct project by executing the following command:

gcloud init

Enable GCP services

Enable the GKE and SQL services for your project by executing the following command:

gcloud services enable container.googleapis.com sql-component.googleapis.com sqladmin.googleapis.com

Pull ThingsBoard PE images from docker hub

Run the following commands to verify that you can pull the images from Docker Hub.

docker pull thingsboard/tb-pe-node:4.3.0.1PE

docker pull thingsboard/tb-pe-web-report:4.3.0.1PE

docker pull thingsboard/tb-pe-web-ui:4.3.0.1PE

docker pull thingsboard/tb-pe-js-executor:4.3.0.1PE

docker pull thingsboard/tb-pe-http-transport:4.3.0.1PE

docker pull thingsboard/tb-pe-mqtt-transport:4.3.0.1PE

docker pull thingsboard/tb-pe-coap-transport:4.3.0.1PE

docker pull thingsboard/tb-pe-lwm2m-transport:4.3.0.1PE

docker pull thingsboard/tb-pe-snmp-transport:4.3.0.1PE

Step 1. Clone ThingsBoard PE K8S scripts repository

Clone the repository and change the working directory to the GCP microservices scripts:

git clone -b release-4.3.0.1 https://github.com/thingsboard/thingsboard-pe-k8s.git --depth 1

cd thingsboard-pe-k8s/gcp/microservices

Step 2. Define environment variables

Define environment variables that you will use in various commands later in this guide.

We assume you are using Linux. Execute the following commands:

export GCP_PROJECT=$(gcloud config get-value project)

export GCP_REGION=us-central1

export GCP_ZONE=us-central1

export GCP_ZONE1=us-central1-a

export GCP_ZONE2=us-central1-b

export GCP_ZONE3=us-central1-c

export GCP_NETWORK=default

export TB_CLUSTER_NAME=tb-pe-msa

export TB_DATABASE_NAME=tb-db

echo "You have selected project: $GCP_PROJECT, region: $GCP_REGION, gcp zones: $GCP_ZONE1,$GCP_ZONE2,$GCP_ZONE3, network: $GCP_NETWORK, cluster: $TB_CLUSTER_NAME, database: $TB_DATABASE_NAME"

where:

- the first line uses the gcloud command to fetch your current GCP project ID. We will refer to it later in this guide using $GCP_PROJECT;

- us-central1 is one of the available compute regions. We will refer to it later in this guide using $GCP_REGION (and $GCP_ZONE);

- us-central1-a, us-central1-b and us-central1-c are zones within that region where the cluster nodes will run. We will refer to them later in this guide using $GCP_ZONE1, $GCP_ZONE2 and $GCP_ZONE3;

- default is the default GCP network name. We will refer to it later in this guide using $GCP_NETWORK;

- tb-pe-msa is the name of your cluster. You may input a different name. We will refer to it later in this guide using $TB_CLUSTER_NAME;

- tb-db is the name of your database server. You may input a different name. We will refer to it later in this guide using $TB_DATABASE_NAME.
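Because every later command depends on these variables, a quick guard can catch a typo or a new shell session in which they were never exported. The sketch below is a minimal example that assumes bash (it relies on indirect expansion, `${!name}`); the function name `require_env` is hypothetical and not part of the ThingsBoard scripts.

```shell
# Hypothetical helper: fail if any of the named variables is unset or empty.
# Requires bash: ${!name:-} expands the variable whose name is stored in $name.
require_env() {
  local name missing=0
  for name in "$@"; do
    if [ -z "${!name:-}" ]; then
      echo "ERROR: environment variable $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check all variables from this step before creating the cluster.
# require_env GCP_PROJECT GCP_REGION GCP_ZONE GCP_ZONE1 GCP_ZONE2 GCP_ZONE3 \
#   GCP_NETWORK TB_CLUSTER_NAME TB_DATABASE_NAME
```

The function reports every missing variable before returning, rather than stopping at the first one, so a single run shows everything that still needs to be exported.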

Step 3. Configure and create GKE cluster

Create a regional cluster distributed across 3 zones with nodes of your preferred machine type.

The example below provisions one e2-standard-4 node per zone (three nodes total), but you can modify the --machine-type and --num-nodes to suit your workload requirements.

For a full list of available machine types and their specifications, refer to the GCP machine types documentation.

Execute the following command (recommended):

gcloud container clusters create $TB_CLUSTER_NAME \
  --release-channel stable \
  --region $GCP_REGION \
  --network=$GCP_NETWORK \
  --node-locations $GCP_ZONE1,$GCP_ZONE2,$GCP_ZONE3 \
  --enable-ip-alias \
  --num-nodes=1 \
  --node-labels=role=main \
  --machine-type=e2-standard-4

Alternatively, you may follow this guide for a custom cluster setup.

Step 4. Update the context of kubectl

Update the context of kubectl using the following command:

gcloud container clusters get-credentials $TB_CLUSTER_NAME --region $GCP_REGION

Step 5. Provision Databases

Step 5.1 Google Cloud SQL (PostgreSQL) Instance

5.1 Prerequisites

Enable service networking to allow your K8S cluster to connect to the DB instance:

gcloud services enable servicenetworking.googleapis.com --project=$GCP_PROJECT

gcloud compute addresses create google-managed-services-$GCP_NETWORK \
  --global \
  --purpose=VPC_PEERING \
  --prefix-length=16 \
  --network=projects/$GCP_PROJECT/global/networks/$GCP_NETWORK

gcloud services vpc-peerings connect \
  --service=servicenetworking.googleapis.com \
  --ranges=google-managed-services-$GCP_NETWORK \
  --network=$GCP_NETWORK \
  --project=$GCP_PROJECT

5.2 Create database server instance

Create the PostgreSQL instance with database version “PostgreSQL 16” and the following recommendations:

- use the same region as your K8S cluster (GCP_REGION);

- use the same VPC network as your K8S cluster (GCP_NETWORK);

- use a private IP address to connect to your instance and disable the public IP address;

- use a highly available DB instance for production and a single-zone instance for development clusters;

- use at least 2 vCPUs and 7.5 GB RAM, which is sufficient for most workloads. You may scale it later if needed.

Execute the following command:

gcloud beta sql instances create $TB_DATABASE_NAME \
  --database-version=POSTGRES_16 \
  --region=$GCP_REGION --availability-type=regional \
  --no-assign-ip --network=projects/$GCP_PROJECT/global/networks/$GCP_NETWORK \
  --cpu=2 --memory=7680MB

Alternatively, you may follow this guide to configure your database.

Note the IP address (YOUR_DB_IP_ADDRESS) from the command output. A successful command output should look similar to this:

Created [https://sqladmin.googleapis.com/sql/v1beta4/projects/YOUR_PROJECT_ID/instances/$TB_DATABASE_NAME].

NAME DATABASE_VERSION LOCATION TIER PRIMARY_ADDRESS PRIVATE_ADDRESS STATUS

$TB_DATABASE_NAME POSTGRES_16 us-central1-f db-custom-2-7680 35.192.189.68 - RUNNABLE
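Instead of copying the address from the output by hand, you can query it with gcloud. This is a sketch that assumes the instance has a single IP address (private only, as requested with `--no-assign-ip`), so the first entry of `ipAddresses` is the one you need. The helper name `get_db_ip` is hypothetical.

```shell
# Hypothetical helper: print the first IP address of the Cloud SQL instance.
# Assumes a single (private) IP, so ipAddresses[0] is the address to use.
get_db_ip() {
  gcloud sql instances describe "$TB_DATABASE_NAME" \
    --project="$GCP_PROJECT" \
    --format="value(ipAddresses[0].ipAddress)"
}

# Usage: DB_IP="$(get_db_ip)"; echo "YOUR_DB_IP_ADDRESS is $DB_IP"
```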

5.3 Set database password

Set the password for your new database server instance:

gcloud sql users set-password postgres \
  --instance=$TB_DATABASE_NAME \
  --password=secret

where:

- instance is the name of your database server instance;

- secret is the password. You should input a different password. We will refer to it later in this guide using YOUR_DB_PASSWORD;

5.4 Create database

Create the “thingsboard” database inside your PostgreSQL database server instance:

gcloud sql databases create thingsboard --instance=$TB_DATABASE_NAME

where thingsboard is the name of your database. You may input a different name. We will refer to it later in this guide using YOUR_DB_NAME.

Step 5.2 Cassandra (optional)

Using Cassandra is optional. We recommend using Cassandra if you plan to insert more than 5K data points per second or want to optimize storage space.

Provision additional node groups

Provision additional node groups that will host Cassandra instances. You may change the machine type; at least 4 vCPUs and 16GB of RAM are recommended.

We will create 3 separate node pools with 1 node per zone. Since we plan to use zonal disks, we don’t want k8s to launch a pod on a node where the corresponding disk is not available. The nodes in these pools will share the same node label, which we will use to target the deployment of our stateful set.

gcloud container node-pools create cassandra1 --cluster=$TB_CLUSTER_NAME --zone=$GCP_ZONE --node-locations=$GCP_ZONE1 \
  --node-labels=role=cassandra --num-nodes=1 --min-nodes=1 --max-nodes=1 --machine-type=e2-standard-4

gcloud container node-pools create cassandra2 --cluster=$TB_CLUSTER_NAME --zone=$GCP_ZONE --node-locations=$GCP_ZONE2 \
  --node-labels=role=cassandra --num-nodes=1 --min-nodes=1 --max-nodes=1 --machine-type=e2-standard-4

gcloud container node-pools create cassandra3 --cluster=$TB_CLUSTER_NAME --zone=$GCP_ZONE --node-locations=$GCP_ZONE3 \
  --node-labels=role=cassandra --num-nodes=1 --min-nodes=1 --max-nodes=1 --machine-type=e2-standard-4

Deploy Cassandra stateful set

Create the ThingsBoard namespace and set it as the default namespace of the current kubectl context:

kubectl apply -f tb-namespace.yml

kubectl config set-context $(kubectl config current-context) --namespace=thingsboard

Deploy Cassandra to the new node groups:

kubectl apply -f receipts/cassandra.yml

The Cassandra cluster may take a few minutes to start. You may monitor the process using:

kubectl get pods
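Rather than re-running `kubectl get pods`, you can block until all Cassandra replicas are ready. This sketch assumes the stateful set defined in receipts/cassandra.yml is named `cassandra` (inferred from the `cassandra-0` pod used in the keyspace step) and lives in the thingsboard namespace; the 900s default timeout is an arbitrary choice, and `wait_for_cassandra` is a hypothetical helper name.

```shell
# Hypothetical helper: wait until every replica of the "cassandra" stateful set
# is ready, or until the timeout (default 900s, an arbitrary value) expires.
wait_for_cassandra() {
  local timeout="${1:-900s}"
  kubectl rollout status statefulset/cassandra -n thingsboard --timeout="$timeout"
}

# Usage: wait_for_cassandra        # or: wait_for_cassandra 20m
```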

Update DB settings

Append the Cassandra settings to the ThingsBoard DB settings file:

echo "  DATABASE_TS_TYPE: cassandra" >> tb-node-db-configmap.yml
echo "  CASSANDRA_URL: cassandra:9042" >> tb-node-db-configmap.yml
echo "  CASSANDRA_LOCAL_DATACENTER: $GCP_REGION" >> tb-node-db-configmap.yml

Check that the settings are updated:

cat tb-node-db-configmap.yml | grep DATABASE_TS_TYPE

Expected output:

DATABASE_TS_TYPE: cassandra
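Running the `echo ... >>` commands twice appends duplicate keys to the ConfigMap. A minimal idempotent alternative, assuming that entries under `data:` are indented with two spaces, appends a key only if it is not already present. Note that it skips, rather than updates, a key that already exists. `add_configmap_entry` is a hypothetical helper name.

```shell
# Hypothetical helper: append "  KEY: value" to a ConfigMap file unless a line
# for KEY already exists (two-space indentation under "data:" is assumed).
add_configmap_entry() {
  local file="$1" key="$2" value="$3"
  if ! grep -q "^  ${key}:" "$file" 2>/dev/null; then
    printf '  %s: %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage:
# add_configmap_entry tb-node-db-configmap.yml DATABASE_TS_TYPE cassandra
# add_configmap_entry tb-node-db-configmap.yml CASSANDRA_URL cassandra:9042
# add_configmap_entry tb-node-db-configmap.yml CASSANDRA_LOCAL_DATACENTER "$GCP_REGION"
```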

Create keyspace

Create the thingsboard keyspace using the following command:

kubectl exec -it cassandra-0 -- bash -c "cqlsh -e \
\"CREATE KEYSPACE IF NOT EXISTS thingsboard \
WITH replication = { \
'class' : 'NetworkTopologyStrategy', \
'us-central1' : '3' \
};\""
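The keyspace command above hard-codes `'us-central1'` as the Cassandra datacenter name. If you chose a different region, the replication map must use your datacenter name instead. The sketch below builds the statement from a parameter, assuming the datacenter name equals `$GCP_REGION` (the value written to CASSANDRA_LOCAL_DATACENTER above); `keyspace_cql` is a hypothetical helper name.

```shell
# Hypothetical helper: print the CREATE KEYSPACE statement for a given
# datacenter name and replication factor (default 3, one replica per zone).
keyspace_cql() {
  local dc="$1" rf="${2:-3}"
  printf "CREATE KEYSPACE IF NOT EXISTS thingsboard WITH replication = { 'class' : 'NetworkTopologyStrategy', '%s' : '%s' };" "$dc" "$rf"
}

# Usage (assumes the datacenter is named after the region):
# kubectl exec -it cassandra-0 -- cqlsh -e "$(keyspace_cql "$GCP_REGION")"
```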

Step 6. Configure license key

We assume you have already chosen your subscription plan or decided to purchase a perpetual license. If not, please navigate to the pricing page to select the best license option for your case and get your license. See How-to get pay-as-you-go subscription or How-to get perpetual license for more details.

Create a Kubernetes secret with your license key:

export TB_LICENSE_KEY=PUT_YOUR_LICENSE_KEY_HERE

kubectl create -n thingsboard secret generic tb-license --from-literal=license-key=$TB_LICENSE_KEY
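The commands above put the license key in your shell history, and `kubectl create` fails if the secret already exists. The sketch below avoids both by reading the key interactively and piping kubectl's client-side dry run into `kubectl apply`. `create_license_secret` is a hypothetical helper name. The key is still passed to kubectl as an argument, so it may briefly be visible in the local process list.

```shell
# Hypothetical helper: create or update the tb-license secret idempotently.
# "--dry-run=client -o yaml" renders the Secret locally; "kubectl apply"
# then creates it, or updates it if it already exists.
create_license_secret() {
  local key="$1"
  if [ -z "$key" ]; then
    echo "ERROR: license key is empty" >&2
    return 1
  fi
  kubectl create -n thingsboard secret generic tb-license \
    --from-literal=license-key="$key" --dry-run=client -o yaml \
    | kubectl apply -f -
}

# Usage: read the key without echoing it, then create the secret.
# read -rsp "License key: " TB_LICENSE_KEY; echo
# create_license_secret "$TB_LICENSE_KEY"
```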

Step 7. Installation

Edit “tb-node-db-configmap.yml” and replace YOUR_DB_IP_ADDRESS and YOUR_DB_PASSWORD with the values you have obtained during step 5.

nano tb-node-db-configmap.yml
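If you prefer not to edit the file interactively, sed can substitute both placeholders. This sketch escapes the characters that are special in a sed replacement (backslash, `&` and the `|` delimiter), so most passwords work unchanged. It does not handle YAML quoting (for example, a password containing a double quote) or multi-line values. Both helper names are hypothetical.

```shell
# Hypothetical helper: escape \, & and | so a value can be used literally
# as the replacement in a sed "s|...|...|" command.
escape_sed_replacement() {
  printf '%s' "$1" | sed -e 's/[\\&|]/\\&/g'
}

# Hypothetical helper: replace YOUR_DB_IP_ADDRESS and YOUR_DB_PASSWORD in a file.
fill_db_placeholders() {
  local file="$1" ip="$2" password="$3" tmp status=0
  tmp="$(mktemp)" || return 1
  # Write to a temp file first, then copy back with cat so the original
  # file keeps its permissions.
  sed -e "s|YOUR_DB_IP_ADDRESS|$(escape_sed_replacement "$ip")|g" \
      -e "s|YOUR_DB_PASSWORD|$(escape_sed_replacement "$password")|g" \
      "$file" > "$tmp" && cat "$tmp" > "$file" || status=$?
  rm -f "$tmp"
  return "$status"
}

# Usage (replace the sample values with your own):
# fill_db_placeholders tb-node-db-configmap.yml 10.0.0.3 'my-secret-password'
```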

Execute the following command to run the installation:

./k8s-install-tb.sh --loadDemo

where:

- --loadDemo - optional argument. Loads additional demo data.

After this command finishes, you should see the following line in the console:

Installation finished successfully!

Step 8. Starting

Execute the following command to deploy the third-party components (ZooKeeper, Kafka, Redis) and the main ThingsBoard microservices: tb-node, tb-web-ui and js-executors:

./k8s-deploy-resources.sh

After a few minutes, you may run kubectl get pods. If everything went fine, you should see the tb-node-0 pod in the READY state.
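`kubectl wait` can replace the manual polling. A minimal sketch, assuming the pod lives in the thingsboard namespace; the 600s default timeout is an arbitrary assumption, since the first start-up can be slow, and `wait_for_tb_node` is a hypothetical helper name.

```shell
# Hypothetical helper: block until tb-node-0 reports the Ready condition,
# or fail after the timeout (default 600s, an arbitrary value).
wait_for_tb_node() {
  kubectl wait --for=condition=Ready pod/tb-node-0 -n thingsboard --timeout="${1:-600s}"
}

# Usage: wait_for_tb_node && echo "tb-node-0 is ready"
```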

You should also deploy the transport microservices. Omit the protocols that you don’t use in order to save resources:

HTTP transport (optional)

kubectl apply -f transports/tb-http-transport.yml

MQTT transport (optional)

kubectl apply -f transports/tb-mqtt-transport.yml

CoAP transport (optional)

kubectl apply -f transports/tb-coap-transport.yml

LwM2M transport (optional)

kubectl apply -f transports/tb-lwm2m-transport.yml

SNMP transport (optional)

kubectl apply -f transports/tb-snmp-transport.yml
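If you deploy several transports, a small loop keeps the selection in one place. This sketch maps each protocol name to the transports/tb-&lt;name&gt;-transport.yml file names used above and rejects unknown names; `deploy_transports` is a hypothetical helper name.

```shell
# Hypothetical helper: apply the manifests for the listed transports only.
deploy_transports() {
  local proto
  for proto in "$@"; do
    case "$proto" in
      http|mqtt|coap|lwm2m|snmp)
        kubectl apply -f "transports/tb-${proto}-transport.yml" || return 1 ;;
      *)
        echo "ERROR: unknown transport '$proto'" >&2
        return 1 ;;
    esac
  done
}

# Usage: deploy_transports http mqtt
```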

Step 9. Configure Load Balancers

9.1 Configure HTTP(S) Load Balancer

Configure the HTTP(S) load balancer to access the web interface of your ThingsBoard instance. You have 3 configuration options:

- http - Load Balancer without HTTPS support. Recommended for development. Its only advantages are simple configuration and minimal cost. It may be a good option for a development server but is definitely not suitable for production.

- https - Load Balancer with HTTPS support. Recommended for production. Acts as an SSL termination point. You may easily configure it to issue and maintain a valid SSL certificate. Automatically redirects all non-secure (HTTP) traffic to the secure (HTTPS) port.

- transparent - Load Balancer that simply forwards traffic to the http and https ports of ThingsBoard. Requires you to provision and maintain a valid SSL certificate. Useful for production environments that can’t tolerate the LB being an SSL termination point.

See the links and instructions below on how to configure each of the suggested options.

HTTP Load Balancer

Execute the following command to deploy a plain HTTP load balancer:

kubectl apply -f receipts/http-load-balancer.yml

The process of load balancer provisioning may take some time. You may periodically check the status of the load balancer using the following command:

kubectl get ingress

Once provisioned, you should see similar output:

NAME CLASS HOSTS ADDRESS PORTS AGE

tb-http-loadbalancer <none> * 34.111.24.134 80 7m25s
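To avoid re-running `kubectl get ingress` by hand, you can poll the ingress status until an address appears. This is a sketch: it reads `.status.loadBalancer.ingress[0].ip` with a jsonpath query and assumes the thingsboard namespace; the 10-second interval and the default of 60 attempts are arbitrary. `wait_for_ingress_ip` is a hypothetical helper name.

```shell
# Hypothetical helper: poll an ingress until it has an external IP, then print it.
wait_for_ingress_ip() {
  local name="$1" attempts="${2:-60}" ip i
  for ((i = 0; i < attempts; i++)); do
    ip="$(kubectl get ingress "$name" -n thingsboard \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep 10
  done
  echo "ERROR: no address assigned to $name yet" >&2
  return 1
}

# Usage: wait_for_ingress_ip tb-http-loadbalancer
```

The same call should also work for the tb-https-loadbalancer ingress described below.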

Now you can use the address (the one you see instead of 34.111.24.134 in the command output) to access the HTTP web UI (port 80) and connect your devices via the HTTP API. Use the following default credentials:

- System Administrator: sysadmin@thingsboard.org / sysadmin

- Tenant Administrator: tenant@thingsboard.org / tenant

- Customer User: customer@thingsboard.org / customer

HTTPS Load Balancer

The process of configuring the load balancer using Google-managed SSL certificates is described on the official documentation page. The instructions below are extracted from the official documentation. Make sure you read the prerequisites carefully before proceeding. First, reserve a global static IP address for the load balancer:

gcloud compute addresses create thingsboard-http-lb-address --global

Replace PUT_YOUR_DOMAIN_HERE with a valid domain name in the https-load-balancer.yml file:

nano receipts/https-load-balancer.yml

Execute the following command to deploy the secure HTTPS load balancer:

kubectl apply -f receipts/https-load-balancer.yml

The process of load balancer provisioning may take some time. You may periodically check the status of the load balancer using the following command:

kubectl get ingress

Once provisioned, you should see similar output:

NAME CLASS HOSTS ADDRESS PORTS AGE

tb-https-loadbalancer <none> * 34.111.24.134 80 7m25s

Now, point the domain name you used to the load balancer IP address (the one you see instead of 34.111.24.134 in the command output) by creating a DNS A record.

Check that the domain name is configured correctly using dig:

dig YOUR_DOMAIN_NAME

Sample output:

; <<>> DiG 9.11.3-1ubuntu1.16-Ubuntu <<>> YOUR_DOMAIN_NAME

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 12513

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 65494

;; QUESTION SECTION:

;YOUR_DOMAIN_NAME. IN A

;; ANSWER SECTION:

YOUR_DOMAIN_NAME. 36 IN A 34.111.24.134

;; Query time: 0 msec

;; SERVER: 127.0.0.53#53(127.0.0.53)

;; WHEN: Fri Nov 19 13:00:00 EET 2021

;; MSG SIZE rcvd: 74

Once assigned, wait for the Google-managed certificate to finish provisioning. This might take up to 60 minutes. You can check the status of the certificate using the following command:

kubectl describe managedcertificate managed-cert

The certificate will eventually be provisioned if you have configured the domain records properly.

Once provisioned, you may use your domain name to access the web UI (over HTTPS) and connect your devices via the HTTP API.
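The DNS and certificate checks above can be combined into one helper. This sketch assumes dig is installed, that the ManagedCertificate is named managed-cert and lives in the thingsboard namespace, and that its status exposes a `certificateStatus` field (GKE reports values such as Provisioning or Active). `check_https_setup` is a hypothetical helper name.

```shell
# Hypothetical helper: verify that DOMAIN resolves to LB_IP, then print the
# status of the managed-cert ManagedCertificate.
check_https_setup() {
  local domain="$1" lb_ip="$2" resolved status
  # If a CNAME chain is returned, the last line of "dig +short" is the A record.
  resolved="$(dig +short "$domain" A | tail -n 1)"
  if [ "$resolved" != "$lb_ip" ]; then
    echo "DNS: $domain resolves to '${resolved:-nothing}', expected $lb_ip" >&2
    return 1
  fi
  status="$(kubectl get managedcertificate managed-cert -n thingsboard \
    -o jsonpath='{.status.certificateStatus}')"
  echo "Certificate status: ${status:-unknown}"
}

# Usage: check_https_setup YOUR_DOMAIN_NAME 34.111.24.134
```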

9.2. Configure MQTT Load Balancer (Optional)

Configure MQTT load balancer if you plan to use MQTT protocol to connect devices.

Create a TCP load balancer using the following command:

kubectl apply -f receipts/mqtt-load-balancer.yml

The load balancer will forward all TCP traffic for ports 1883 and 8883.

MQTT over SSL

This type of load balancer requires you to provision and maintain a valid SSL certificate on your own. Follow the generic MQTT over SSL guide to configure the required environment variables in the transports/tb-mqtt-transport.yml file.

9.3. Configure CoAP Load Balancer (Optional)

Configure CoAP load balancer if you plan to use CoAP protocol to connect devices.

Create a CoAP load balancer using the following command:

kubectl apply -f receipts/coap-load-balancer.yml

The load balancer will forward all UDP traffic for the following ports:

- 5683 - CoAP server non-secure port

- 5684 - CoAP server secure DTLS port.

CoAP over DTLS

This type of load balancer requires you to provision and maintain a valid SSL certificate on your own. Follow the generic CoAP over DTLS guide to configure the required environment variables in the transports/tb-coap-transport.yml file.

9.4. Configure LwM2M Load Balancer (Optional)

Configure LwM2M load balancer if you plan to use LwM2M protocol to connect devices.

Create a LwM2M UDP load balancer using the following command:

kubectl apply -f receipts/lwm2m-load-balancer.yml

The load balancer will forward all UDP traffic for the following ports:

- 5685 - LwM2M server non-secure port.

- 5686 - LwM2M server secure DTLS port.

- 5687 - LwM2M bootstrap server non-secure port.

- 5688 - LwM2M bootstrap server secure DTLS port.

LwM2M over DTLS

This type of load balancer requires you to provision and maintain a valid SSL certificate on your own. Follow the generic LwM2M over DTLS guide to configure the required environment variables in the transports/tb-lwm2m-transport.yml file.

9.5. Configure Edge Load Balancer (Optional)

Configure the Edge load balancer if you plan to connect Edge instances to your ThingsBoard server.

To create a TCP Edge load balancer, apply the provided YAML file using the following command:

kubectl apply -f receipts/edge-load-balancer.yml

The load balancer will forward all TCP traffic on port 7070.

After the Edge load balancer is provisioned, you can connect Edge instances to your ThingsBoard server.

Before connecting Edge instances, you need to obtain the external IP address of the Edge load balancer. To retrieve this IP address, execute the following command:

kubectl get services | grep "EXTERNAL-IP\|tb-edge-loadbalancer"

You should see output similar to the following:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

tb-edge-loadbalancer LoadBalancer 10.44.5.255 104.154.29.225 7070:30783/TCP 85m

Make a note of the external IP address and use it later in the Edge connection parameters as CLOUD_RPC_HOST.
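Instead of filtering the service table with grep, a jsonpath query can print just the external IP. A minimal sketch, assuming the service is in the thingsboard namespace; `edge_lb_ip` is a hypothetical helper name.

```shell
# Hypothetical helper: print only the external IP of tb-edge-loadbalancer,
# i.e. the value to use as CLOUD_RPC_HOST on the Edge side.
edge_lb_ip() {
  kubectl get service tb-edge-loadbalancer -n thingsboard \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage: echo "CLOUD_RPC_HOST=$(edge_lb_ip)"
```

The output is empty while the load balancer is still being provisioned.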

Step 10. Configure Trendz (Optional)

10.1. Pull Trendz images from docker hub

Run the following commands to verify that you can pull the images from Docker Hub.

docker pull thingsboard/trendz:1.15.0.4

docker pull thingsboard/trendz-python-executor:1.15.0.4

10.2. Create a Trendz database in the existing Google Cloud SQL (PostgreSQL) Instance

Edit “trendz/trendz-secret.yml”, replace YOUR_DB_IP_ADDRESS and YOUR_DB_PASSWORD with your values, and then apply the secret and the Kubernetes Job:

kubectl apply -f ./trendz/trendz-secret.yml

kubectl apply -f ./trendz/trendz-create-db.yml

You can view the Job logs by running the following command:

kubectl logs job/trendz-create-db -n thingsboard
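To make sure the database Job has finished before moving on, you can combine `kubectl wait` with the log command above. A sketch with an arbitrary 300s default timeout; `wait_for_trendz_db_job` is a hypothetical helper name.

```shell
# Hypothetical helper: wait for the trendz-create-db Job to complete,
# then print its logs. Fails if the Job does not complete in time.
wait_for_trendz_db_job() {
  kubectl wait --for=condition=complete job/trendz-create-db \
    -n thingsboard --timeout="${1:-300s}" \
    && kubectl logs job/trendz-create-db -n thingsboard
}

# Usage: wait_for_trendz_db_job
```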

10.3. Trendz starting

Execute the following command to run the initial setup of the database. This command launches a short-lived pod to provision the necessary DB tables, indexes, etc.

./k8s-deploy-trendz.sh

After this command finishes, you should see the following line in the console:

Trendz installed successfully!

Step 11. Using

Now you can open the ThingsBoard web interface in your browser using the IP address of the load balancer.

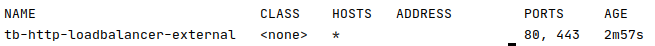

You can see the address (the ADDRESS column) of the HTTP load balancer using the following command:

kubectl get ingress

You should see a similar picture.

To connect to the cluster via MQTT or CoAP, you’ll need the corresponding service. You can list the services with the following command:

kubectl get service

You should see a similar picture.

There are two load-balancers:

- tb-mqtt-loadbalancer - for TCP (MQTT) protocol

- tb-udp-loadbalancer - for UDP (CoAP/LwM2M) protocol

Use EXTERNAL-IP field of the load-balancers to connect to the cluster.
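To confirm that MQTT traffic reaches the cluster end to end, you can publish a single telemetry message. This sketch assumes the Eclipse Mosquitto clients (`mosquitto_pub`) are installed and that you pass the access token of a device you have already created in ThingsBoard; `v1/devices/me/telemetry` is ThingsBoard's MQTT device telemetry topic. `send_test_telemetry` is a hypothetical helper name.

```shell
# Hypothetical helper: publish one telemetry message through the MQTT load
# balancer. The device access token is sent as the MQTT username (-u).
send_test_telemetry() {
  local host="$1" token="$2"
  mosquitto_pub -d -q 1 -h "$host" -p 1883 \
    -t v1/devices/me/telemetry -u "$token" -m '{"temperature":25}'
}

# Usage: send_test_telemetry <EXTERNAL-IP of tb-mqtt-loadbalancer> YOUR_DEVICE_ACCESS_TOKEN
```

If the message is accepted, the temperature value should appear in the Latest telemetry tab of that device.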

Use the following default credentials:

- System Administrator: sysadmin@thingsboard.org / sysadmin

If you installed database with demo data (using --loadDemo flag) you can also use the following credentials:

- Tenant Administrator: tenant@thingsboard.org / tenant

- Customer User: customer@thingsboard.org / customer

In case of any issues, you can examine service logs for errors. For example, to see ThingsBoard node logs, execute the following command:

kubectl logs -f tb-node-0

Or use kubectl get pods to see the state of the pod.

Or use kubectl get services to see the state of all the services.

Or use kubectl get deployments to see the state of all the deployments.

See the kubectl Cheat Sheet command reference for details.

Execute the following command to delete tb-node and load-balancers:

./k8s-delete-resources.sh

Execute the following command to delete all data (including database):

./k8s-delete-all.sh

Upgrading

Upgrading to new ThingsBoard version

If a database upgrade is needed, execute the following commands:

./k8s-delete-resources.sh

./k8s-upgrade-tb.sh --fromVersion=[FROM_VERSION]

./k8s-deploy-resources.sh

Where:

- FROM_VERSION - the version from which the upgrade should start. See Upgrade Instructions for valid fromVersion values. Note that you have to upgrade versions one by one (for example, 3.6.1 -> 3.6.2 -> 3.6.3, etc.).

Upgrading to new Trendz version (Optional)

If you would like to upgrade Trendz, pull the latest changes from the master branch:

git pull origin master

Then, execute the following command:

./k8s-upgrade-trendz.sh

Note that you can upgrade Trendz to the latest version directly from any earlier version (for example, 1.12.0 -> 1.15.0).

Next steps

- Getting started guides - These guides provide a quick overview of the main ThingsBoard features. Designed to be completed in 15-30 minutes.

- Connect your device - Learn how to connect devices based on your connectivity technology or solution.

- Data visualization - These guides contain instructions on how to configure complex ThingsBoard dashboards.

- Data processing & actions - Learn how to use ThingsBoard Rule Engine.

- IoT Data analytics - Learn how to use rule engine to perform basic analytics tasks.

- Advanced features - Learn about advanced ThingsBoard features.

- Contribution and Development - Learn about contribution and development in ThingsBoard.