- Prerequisites

- Step 1. Open TBMQ K8S scripts repository

- Step 2. Configure and create EKS cluster

- Step 3. Create AWS load-balancer controller

- Step 4. Amazon PostgreSQL DB Configuration

- Step 5. Amazon MSK Configuration

- Step 6. Amazon ElastiCache (Redis) Configuration

- Step 7. Configure links to the Kafka/Postgres/Redis

- Step 8. Installation

- Step 9. Starting

- Step 10. Configure Load Balancers

- Step 11. Validate the setup

- Upgrading

- Cluster deletion

- Next steps

This guide will help you set up TBMQ on AWS EKS.

Prerequisites

Install and configure tools

To deploy TBMQ on an EKS cluster, you’ll need to install the kubectl, eksctl, and awscli tools.

Afterward, you need to configure the Access Key, Secret Key, and default region. To get the Access and Secret keys, please follow this guide. The default region should be the ID of the region where you’d like to deploy the cluster.

aws configure
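Before continuing, it can help to confirm that all three tools are actually on your PATH. The snippet below is a minimal sketch, not part of the TBMQ scripts; the helper name missing_tools is hypothetical. Note that the awscli package installs its executable as aws.

```shell
# Print the name of every listed CLI tool that is not found on PATH.
# "missing_tools" is a hypothetical helper, not part of the TBMQ repository.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

missing="$(missing_tools kubectl eksctl aws)"
if [ -n "$missing" ]; then
  # Unquoted on purpose so the names are printed on one line.
  echo "Missing tools:" $missing
else
  echo "All required tools are installed."
fi
```

Once the tools are present and aws configure has been run, aws sts get-caller-identity is a quick way to check that the configured keys are accepted.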

Step 1. Open TBMQ K8S scripts repository

git clone -b release-2.2.0 https://github.com/thingsboard/tbmq.git

cd tbmq/k8s/aws

Step 2. Configure and create EKS cluster

In the cluster.yml file you can find suggested cluster configuration.

Here are the fields you can change depending on your needs:

- region - should be the AWS region where you want your cluster to be located (the default value is us-east-1);

- availabilityZones - should specify the exact IDs of the region’s availability zones (the default value is [us-east-1a,us-east-1b,us-east-1c]);

- instanceType - the type of the instance with the TBMQ node (the default value is m7a.large).

Note: If you don’t make any changes to instanceType and desiredCapacity fields, the EKS will deploy 2 nodes of type m7a.large.

Command to create AWS cluster:

eksctl create cluster -f cluster.yml

Step 3. Create AWS load-balancer controller

Once the cluster is ready, you’ll need to create the AWS load-balancer controller. You can do it by following this guide. The cluster provisioning scripts will create several load balancers:

- tb-broker-http-loadbalancer - AWS ALB that is responsible for the web UI and REST API;

- tb-broker-mqtt-loadbalancer - AWS NLB that is responsible for the MQTT communication.

Provisioning of the AWS load-balancer controller is a very important step that is required for those load balancers to work properly.

Step 4. Amazon PostgreSQL DB Configuration

You’ll need to set up PostgreSQL on Amazon RDS. One of the ways to do it is by following this guide.

Note: Some recommendations:

- Make sure your PostgreSQL version is 16.x;

- Use the ‘Production’ template for high availability. It enables a lot of useful settings by default;

- Consider creating a custom parameter group for your RDS instance. It will make changing DB parameters easier;

- Consider deploying the RDS instance into private subnets. This way it will be nearly impossible to accidentally expose it to the internet;

- You may also change the username field and set or auto-generate the password field (keep your PostgreSQL password in a safe place).

Note: Make sure your database is accessible from the cluster. One way to achieve this is to create the database in the same VPC and subnets as the TBMQ cluster and use the eksctl-tbmq-cluster-ClusterSharedNodeSecurityGroup-* security group. See the screenshots below.

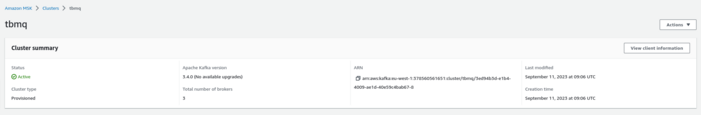

Step 5. Amazon MSK Configuration

You’ll need to set up Amazon MSK.

To do so, open the AWS console, go to the MSK submenu, press the Create cluster button, and choose the Custom create mode.

You should see a similar image:

Note: Some recommendations:

- The Apache Kafka version can safely be set to 3.7.0, since TBMQ is fully tested on it;

- Use m5.large or similar instance types;

- Consider creating a custom cluster configuration for your MSK. It will make changing Kafka parameters easier;

- Use the default ‘Monitoring’ settings or enable ‘Enhanced topic-level monitoring’.

Note: Make sure your MSK instance is accessible from the TBMQ cluster. The easiest way to achieve this is to deploy the MSK instance in the same VPC. We also recommend using private subnets. This way it will be nearly impossible to accidentally expose it to the internet.

At the end, carefully review the whole configuration of the MSK and then finish the cluster creation.

Step 6. Amazon ElastiCache (Redis) Configuration

You need to set up ElastiCache for Redis. TBMQ uses cache to store messages for DEVICE persistent clients, to improve performance and avoid frequent DB reads (see below for more details).

It is useful when clients connect to TBMQ with the authentication enabled. For every connection, the request is made to find MQTT client credentials that can authenticate the client. Thus, there could be an excessive amount of requests to be processed for a large number of connecting clients at once.

Please open AWS console and navigate to ElastiCache->Redis clusters->Create Redis cluster.

Note: Some recommendations:

- Specify Redis Engine version 7.x and node type with at least 1 GB of RAM;

- Make sure your Redis cluster is accessible from the TBMQ cluster. The easiest way to achieve this is to deploy the Redis cluster in the same VPC. We also recommend using private subnets. Use the eksctl-tbmq-cluster-ClusterSharedNodeSecurityGroup-* security group;

- Disable automatic backups.

Step 7. Configure links to the Kafka/Postgres/Redis

Amazon RDS PostgreSQL

Once the database switches to the ‘Available’ state, get the Endpoint of the RDS PostgreSQL instance in the AWS Console and paste it into SPRING_DATASOURCE_URL in the tb-broker-db-configmap.yml file, replacing the RDS_URL_HERE part.

You’ll also need to set SPRING_DATASOURCE_USERNAME and SPRING_DATASOURCE_PASSWORD to the PostgreSQL username and password, respectively.
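If you prefer not to edit the file by hand, the placeholder can be substituted from the command line. This is a minimal sketch: replace_placeholder is a hypothetical helper, and the endpoint in the usage comment is a made-up example. It assumes the value contains no "|" or "&" characters, which holds for RDS hostnames.

```shell
# Replace every occurrence of a placeholder token in a file with a value.
# A backup copy is kept next to the file as <file>.bak.
# "|" is the sed delimiter so that values containing "/" are handled.
# "replace_placeholder" is a hypothetical helper, not part of the TBMQ scripts.
replace_placeholder() {
  file="$1"
  placeholder="$2"
  value="$3"
  sed -i.bak "s|${placeholder}|${value}|g" "$file"
}

# Usage (the endpoint below is an invented example, use your own):
# replace_placeholder tb-broker-db-configmap.yml RDS_URL_HERE \
#   "tbmq-db.abc123example.us-east-1.rds.amazonaws.com"
# grep -n SPRING_DATASOURCE_URL tb-broker-db-configmap.yml
```

The -i.bak form is used because it is accepted by both GNU and BSD sed.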

Amazon MSK

Once the MSK cluster switches to the ‘Active’ state, execute the following command to get the list of brokers:

aws kafka get-bootstrap-brokers --region us-east-1 --cluster-arn $CLUSTER_ARN

Where $CLUSTER_ARN is the Amazon Resource Name (ARN) of the MSK cluster.

You’ll need to paste the value of BootstrapBrokerString into the TB_KAFKA_SERVERS environment variable in the tb-broker.yml file.

Alternatively, click View client information on the MSK cluster page in the AWS console and copy the bootstrap server information in plaintext.
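To get only the broker string rather than the full JSON response, the AWS CLI global options --query and --output can be added to the same call. The sketch below wraps it in a hypothetical helper, get_bootstrap_brokers; the default region mirrors the one used in this guide.

```shell
# Print the plaintext bootstrap broker string of an MSK cluster.
# $1 - cluster ARN, $2 - region (defaults to us-east-1, as in this guide).
# "get_bootstrap_brokers" is a hypothetical helper name.
get_bootstrap_brokers() {
  cluster_arn="$1"
  region="${2:-us-east-1}"
  aws kafka get-bootstrap-brokers \
    --region "$region" \
    --cluster-arn "$cluster_arn" \
    --query 'BootstrapBrokerString' \
    --output text
}

# Usage:
# get_bootstrap_brokers "$CLUSTER_ARN"
```

The printed value is what goes into TB_KAFKA_SERVERS in tb-broker.yml.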

Amazon ElastiCache

Once the Redis cluster switches to the ‘Available’ state, open ‘Cluster details’ and copy the Primary endpoint without the “:6379” port suffix. This value is YOUR_REDIS_ENDPOINT_URL_WITHOUT_PORT.

Edit tb-broker-cache-configmap.yml and replace YOUR_REDIS_ENDPOINT_URL_WITHOUT_PORT.
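The port suffix is easy to leave in by accident when copying the endpoint. As a rough sketch, the two hypothetical helpers below strip a trailing ":6379" and write the result into the config map file; the endpoint in the usage comment is an invented example.

```shell
# Drop a trailing ":6379" from a Redis primary endpoint, if present.
# Both function names here are hypothetical, not part of the TBMQ scripts.
redis_host() {
  printf '%s\n' "${1%:6379}"
}

# Substitute the placeholder in the given file ($1) with the host part of
# the endpoint ($2). A backup copy is kept as <file>.bak.
set_redis_endpoint() {
  host="$(redis_host "$2")"
  sed -i.bak "s|YOUR_REDIS_ENDPOINT_URL_WITHOUT_PORT|${host}|g" "$1"
}

# Usage (invented endpoint, use the one from 'Cluster details'):
# set_redis_endpoint tb-broker-cache-configmap.yml \
#   "tbmq-redis.abc123.ng.0001.use1.cache.amazonaws.com:6379"
```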

Step 8. Installation

Execute the following command to run installation:

./k8s-install-tbmq.sh

After this command finishes, you should see the following line in the console:

INFO o.t.m.b.i.ThingsboardMqttBrokerInstallService - Installation finished successfully!

Step 9. Starting

Execute the following command to deploy the broker:

./k8s-deploy-tbmq.sh

After a few minutes, you may execute the next command to check the state of all pods.

kubectl get pods

If everything went fine, you should be able to see tb-broker-0 and tb-broker-1 pods. Every pod should be in the READY state.
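Instead of polling kubectl get pods by hand, you can block until both broker pods report Ready. This is a sketch under the assumption that the pods are named tb-broker-0 and tb-broker-1 as described above and live in the namespace of your current kubectl context; wait_for_broker is a hypothetical helper and the 600s default timeout is an arbitrary choice.

```shell
# Block until both TBMQ broker pods are Ready or the timeout expires.
# $1 - optional timeout in kubectl duration syntax (default: 600s).
# kubectl wait exits with a non-zero status if the timeout is reached.
# "wait_for_broker" is a hypothetical helper name.
wait_for_broker() {
  timeout="${1:-600s}"
  kubectl wait --for=condition=Ready \
    pod/tb-broker-0 pod/tb-broker-1 \
    --timeout="$timeout"
}

# Usage:
# wait_for_broker && echo "TBMQ pods are ready"
```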

Step 10. Configure Load Balancers

10.1 Configure HTTP(S) Load Balancer

Configure the HTTP(S) Load Balancer to access the web interface of your TBMQ instance. There are 2 configuration options:

- http - Load Balancer without HTTPS support. Recommended for development. Its only advantages are simple configuration and minimal cost. It may be a good option for a development server but is definitely not suitable for production.

- https - Load Balancer with HTTPS support. Recommended for production. Acts as an SSL termination point. You can easily configure it to issue and maintain a valid SSL certificate. Automatically redirects all non-secure (HTTP) traffic to the secure (HTTPS) port.

See links/instructions below on how to configure each of the suggested options.

HTTP Load Balancer

Execute the following command to deploy plain http load balancer:

kubectl apply -f receipts/http-load-balancer.yml

The process of load balancer provisioning may take some time. You may periodically check the status of the load balancer using the following command:

kubectl get ingress

Once provisioned, you should see similar output:

NAME CLASS HOSTS ADDRESS PORTS AGE

tb-broker-http-loadbalancer <none> * k8s-thingsbo-tbbroker-000aba1305-222186756.eu-west-1.elb.amazonaws.com 80 3d1h

HTTPS Load Balancer

Use AWS Certificate Manager to create or import SSL certificate. Note your certificate ARN.

Edit the load balancer configuration and replace YOUR_HTTPS_CERTIFICATE_ARN with your certificate ARN:

nano receipts/https-load-balancer.yml

Execute the following command to deploy the HTTPS load balancer:

kubectl apply -f receipts/https-load-balancer.yml

10.2 Configure MQTT Load Balancer

Configure the MQTT load balancer so that devices can connect using the MQTT protocol.

Create the TCP load balancer using the following command:

kubectl apply -f receipts/mqtt-load-balancer.yml

The load balancer will forward all TCP traffic for ports 1883 and 8883.

One-way TLS

The simplest way to configure MQTTS is to have your MQTT load balancer (AWS NLB) act as a TLS termination point. This sets up a one-way TLS connection, where the traffic between your devices and the load balancer is encrypted, and the traffic between the load balancer and TBMQ is not encrypted. There should be no security issues, since the ALB/NLB is running in your VPC. The only major disadvantage of this option is that you can’t use “X.509 certificate” MQTT client credentials, since information about the client certificate is not transferred from the load balancer to TBMQ.

To enable the one-way TLS:

Use AWS Certificate Manager to create or import SSL certificate. Note your certificate ARN.

Edit the load balancer configuration and replace YOUR_MQTTS_CERTIFICATE_ARN with your certificate ARN:

nano receipts/mqtts-load-balancer.yml

Execute the following command to deploy the MQTTS load balancer:

kubectl apply -f receipts/mqtts-load-balancer.yml

Two-way TLS

The more complex way to enable MQTTS is to obtain a valid (signed) TLS certificate and configure it in TBMQ. The main advantage of this option is that you can use it in combination with “X.509 certificate” MQTT client credentials.

To enable the two-way TLS:

Follow this guide to create a .pem file with the SSL certificate. Store the file as server.pem in the working directory.

You’ll need to create a config-map with your PEM file. You can do it with the following command:

kubectl create configmap tbmq-mqtts-config \
  --from-file=server.pem=YOUR_PEM_FILENAME \
  --from-file=mqttserver_key.pem=YOUR_PEM_KEY_FILENAME \
  -o yaml --dry-run=client | kubectl apply -f -

- where YOUR_PEM_FILENAME is the name of your server certificate file.

- where YOUR_PEM_KEY_FILENAME is the name of your server certificate private key file.

Then, uncomment all sections in the ‘tb-broker.yml’ file that are marked with “Uncomment the following lines to enable two-way MQTTS”.

Execute command to apply changes:

kubectl apply -f tb-broker.yml

Finally, deploy the “transparent” load balancer:

kubectl apply -f receipts/mqtt-load-balancer.yml

Step 11. Validate the setup

Now you can open the TBMQ web interface in your browser using the DNS name of the load balancer.

You can get the DNS name of the load balancer using the following command:

kubectl get ingress

You should see output similar to the following:

NAME CLASS HOSTS ADDRESS PORTS AGE

tb-broker-http-loadbalancer <none> * k8s-thingsbo-tbbroker-000aba1305-222186756.eu-west-1.elb.amazonaws.com 80 3d1h

Use ADDRESS field of the tb-broker-http-loadbalancer to connect to the cluster.

You should see TBMQ login page. Use the following default credentials for System Administrator:

Username:

sysadmin@thingsboard.org

Password:

sysadmin

On the first user log-in you will be asked to change the default password to the preferred one and then re-login using the new credentials.

Validate MQTT access

To connect to the cluster via MQTT, you will need the external address of the corresponding service. You can get it with the following command:

kubectl get services

You should see output similar to the following:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

tb-broker-mqtt-loadbalancer LoadBalancer 10.100.119.170 k8s-thingsbo-tbbroker-b9f99d1ab6-1049a98ba4e28403.elb.eu-west-1.amazonaws.com 1883:30308/TCP,8883:31609/TCP 6m58s

Use EXTERNAL-IP field of the load-balancer to connect to the cluster via MQTT protocol.
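When scripting the validation, the load balancer hostnames can be read directly with a jsonpath query instead of being copied from the table output. The helper below, lb_hostname, is a hypothetical name; the resource names are the ones shown in the outputs above, and the sketch assumes the load balancer publishes a hostname (as AWS load balancers do) rather than an IP.

```shell
# Print the DNS name that AWS assigned to a load-balancer-backed resource.
# $1 - resource kind (ingress or service), $2 - resource name.
# "lb_hostname" is a hypothetical helper name.
lb_hostname() {
  kubectl get "$1" "$2" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Usage:
# lb_hostname ingress tb-broker-http-loadbalancer   # web UI / REST API
# lb_hostname service tb-broker-mqtt-loadbalancer   # MQTT on 1883 / 8883
```

If a netcat client is available on your machine, something like nc -z -w 5 "$(lb_hostname service tb-broker-mqtt-loadbalancer)" 1883 can serve as a quick check that the MQTT port is reachable; flag support varies between netcat variants.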

Troubleshooting

In case of any issues you can examine service logs for errors. For example to see TBMQ logs execute the following command:

kubectl logs -f tb-broker-0

Use the next command to see the state of all statefulsets.

kubectl get statefulsets

See kubectl Cheat Sheet command reference for more details.

Upgrading

Review the release notes and upgrade instructions for detailed information on the latest changes.

If there are no Upgrade to x.x.x notes for your version, you can proceed directly with the general upgrade instructions.

If the documentation does not cover the specific upgrade instructions for your case, please contact us so we can provide further guidance.

Backup and restore (Optional)

While backing up your PostgreSQL database is highly recommended, it is optional before proceeding with the upgrade. For further guidance, follow the next instructions.

Upgrade to 2.2.0

In this release, the MQTT authentication mechanism was migrated from YAML/env configuration into the database. During upgrade, TBMQ needs to know which authentication providers are enabled in your deployment. This information is provided through environment variables passed to the upgrade pod.

The upgrade script requires a file named database-setup.yml that explicitly defines these variables.

Environment variables from your tb-broker.yml file are not applied during the upgrade — only the values in database-setup.yml will be used.

Tips: If you use only Basic authentication, set SECURITY_MQTT_SSL_ENABLED=false. If you use only X.509 authentication, set SECURITY_MQTT_BASIC_ENABLED=false and SECURITY_MQTT_SSL_ENABLED=true.

Supported variables

- SECURITY_MQTT_BASIC_ENABLED (true|false)

- SECURITY_MQTT_SSL_ENABLED (true|false)

- SECURITY_MQTT_SSL_SKIP_VALIDITY_CHECK_FOR_CLIENT_CERT (true|false), usually false.

Once the file is prepared and the values verified, proceed with the upgrade process.

Upgrade to 2.1.0

TBMQ v2.1.0 introduces enhancements, including a new Integration Executor microservice and bumped versions for third-party services.

Add Integration Executor microservice

This release adds support for external integrations via the new Integration Executor microservice.

To retrieve the latest configuration files, including those for Integration Executors, pull the updates from the release branch. Follow the steps outlined in the run upgrade instructions up to the execution of the upgrade script (do not execute .sh commands yet).

The cluster.yml file has been updated to include the new managed node group specifically for Integration Executor pods.

  - name: tbmq-ie
    instanceType: m7a.large
    desiredCapacity: 2
    maxSize: 2
    minSize: 1
    labels: { role: tbmq-ie }
    ssh:
      allow: true
      publicKeyName: 'dlandiak' # Note, use your own public key name here

To create it, execute the following command:

eksctl create nodegroup --config-file=cluster.yml

You may choose to skip creating dedicated instances for Integration Executors.

If so, you can skip this step, but you must update the nodeSelector section in the tbmq-ie.yml file accordingly.

nodeSelector:
  role: tbmq-ie

Change the role from “tbmq-ie” to “tbmq” to deploy Integration Executor pods on the same AWS EC2 instances as the TBMQ pods.

Update third-party services

With v2.1.0, TBMQ updates the versions of key third-party dependencies, including Redis, PostgreSQL, and Kafka. You can review the changes by visiting the following link.

| Service | Previous Version | Updated Version |
|---|---|---|
| Redis | 7.0 | 7.2.5 |
| PostgreSQL | 15.x | 16.x |
| Kafka | 3.5.1 | 3.7.0 |

We recommend aligning your environment with the updated third-party versions to ensure full compatibility with this release. Alternatively, you may proceed without upgrading, but compatibility is only guaranteed with the recommended versions.

After addressing third-party service versions as needed, you can continue with the remaining steps of the upgrade process.

Upgrade to 2.0.0

For the TBMQ v2.0.0 upgrade, if you haven’t installed Redis yet, please follow step 6 to complete the installation. Only then can you proceed with the upgrade.

Run upgrade

In case you would like to upgrade, please pull the recent changes from the latest release branch:

git pull origin release-2.2.0

Note: Make sure your custom changes, if any, are not lost during the merge process.

If you encounter conflicts during the merge process that are not related to your changes, we recommend accepting all the new changes from the remote branch.

You could revert the merge process by executing the following:

git merge --abort

Then repeat the merge, accepting their changes:

git pull origin release-2.2.0 -X theirs

There are several useful options for the default merge strategy:

- -X ours - this option forces conflicting hunks to be auto-resolved cleanly by favoring our version.

- -X theirs - this is the opposite of ours. See more details here.

After that, execute the following command:

|

Where |

Note: You may optionally stop the TBMQ pods with the command below while the database upgrade runs.

./k8s-delete-tbmq.sh

This will cause downtime, but will make sure that the DB state will be consistent after the update. Most of the updates do not require the TBMQ to be stopped.

Once completed, execute deployment of the resources again. This will cause rollout restart of the TBMQ with the newest version.

./k8s-deploy-tbmq.sh

Cluster deletion

Execute the following command to delete TBMQ nodes:

./k8s-delete-tbmq.sh

Execute the following command to delete all TBMQ nodes and configmaps:

./k8s-delete-all.sh

Execute the following command to delete the EKS cluster (you should change the name of the cluster and the region if those differ):

eksctl delete cluster -r us-east-1 -n tbmq -w

Next steps

- Getting started guide - This guide provides a quick overview of TBMQ.

- Security guide - Learn how to enable authentication and authorization for MQTT clients.

- Configuration guide - Learn about TBMQ configuration files and parameters.

- MQTT client type guide - Learn about TBMQ client types.

- Integration with ThingsBoard - Learn how to integrate TBMQ with ThingsBoard.